Peer reviewed: Seeing Through a Bee's Eye - How Do Pollinators Find Flowers in Complex Environments?

By ADRIAN G. DYER

Assoc. Prof. Adrian G. Dyer is a vision scientist and photographer at the School of Media and Communication, RMIT University and Department of Physiology, Monash University, Melbourne.

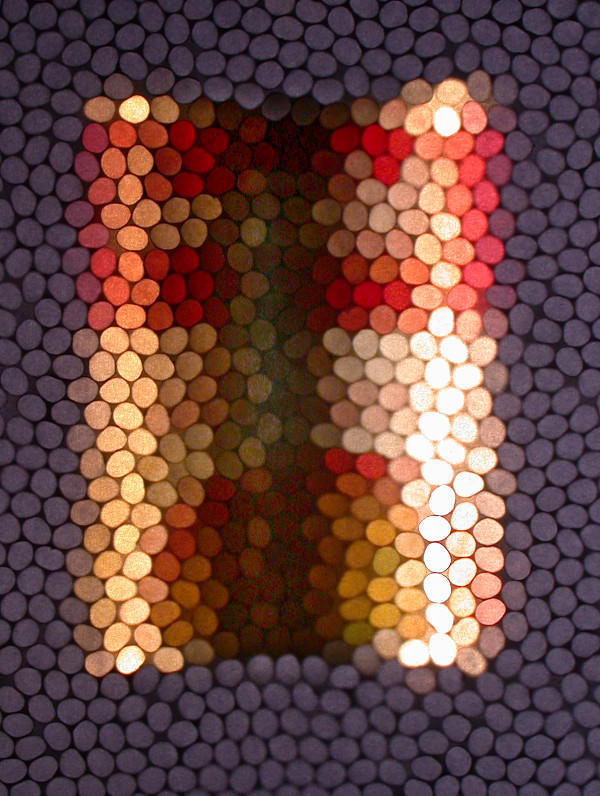

How unlikely is it that we could see a can of soup through the eyes of a bee? Adrian Dyer discusses how this is now possible, revealing a lot about how different individuals see our world. Image by AG Dyer and S Williams - the copyright remains with the artists.

How we perceive the world is strongly constrained by our sensory apparatus, and by how experience allows us to learn about our environment. Bees have a very different visual system to humans: they can see ultraviolet wavelengths, and their images are constructed by a multifaceted compound eye. This system has evolved to become highly efficient at finding flowers in complex natural environments. So what do bees perceive? One hundred years ago the Nobel Laureate Karl von Frisch showed that bees can see colour, and recently it has become possible to reconstruct photographic representations of how bees see colour and spatial information. Furthermore, it has been possible to test free-flying bees to understand how their visual system can perform holistic processing, enable face recognition and size comparisons, or even bind multiple simultaneous rules. Thus an insect brain with less than one million neurons can teach us a lot about how perception is a combination of both sensory apparatus and individual experience.

Introduction

How an individual perceives the world is a result of complex interactions between environmental factors like light, subject matter and viewing background, and their own sensory capabilities like colour vision and/or spatial resolution (Lee 2005). Colour is an important sensory capability, and different individual humans may have different perceptions because of fundamental differences in the spectral sensitivities of their colour photoreceptors, or depending on whether it is night or day (Lee 2005; Kemp et al. 2015). For example, most humans have trichromatic colour vision; that is, we possess blue-, green- and red-sensitive cone photoreceptors that operate in 'photopic' environments of high light intensity (daylight, or a well-lit room), but in the relative darkness of night we often only have 'scotopic' vision mediated by ‘rod’ receptors that are very sensitive to light (photons) but only permit black and white (achromatic) vision (Lee 2005). Interestingly, some humans only have two classes of cone photoreceptors (e.g. blue- and green-sensitive cones) expressed in the retina of the eye; such subjects are dichromatic, which is one reason colour vision is tested in driving tests (Lee 2005). In a few very rare cases some humans are truly colour blind and only have a single class of cone photoreceptors active during daylight illumination conditions (Sacks 1997). Since even amongst the human population there are differences in perception, it is interesting to ask: how do other animals perceive the world? The answer turns out to be rather complex, and indeed in recent times it has been shown that many different species of animal have very different sensory equipment. If we just consider colour as a sensory perception, most mammals are dichromatic (two colour receptors), birds and goldfish are tetrachromatic, whilst many butterfly species may have different numbers of colour photoreceptors (Kemp et al. 2015).
One hundred years ago it was shown that honeybees have a capacity for colour vision when Karl von Frisch trained bees to visit a coloured card to collect a mixture of sugar and water, and showed that bees could choose the target colour even when it was surrounded by a number of grey cards (the definition of colour vision became a capacity to discriminate between visual stimuli based on their wavelength distributions independent of total intensity; Frisch 1914; Kemp et al. 2015). Karl von Frisch went on to win a Nobel Prize for his lifetime work, which established the honeybee as an important model of how sensory processing works in nature. One hundred years after the first demonstration of colour learning in honeybees, I discuss how we currently understand visual perception in this important animal model, and how this knowledge opens new possibilities for using visual communication in fields as wide as art, photography and technology.

Colour perception from a miniaturized brain

One of the most exciting early discoveries about the colour perception of honeybees was that they respond to wavelengths of 'light' beyond the normal sensory capabilities of human blue-green-red trichromatic vision. Specifically, it was shown that bees could be trained to discriminate ultraviolet (UV) wavelengths that could not be detected by humans (Kühn 1927), and further experiments showed that bees obeyed classic rules of colour mixing that had been developed to describe colour perception in humans (Daumer 1956). Early work also showed that flowers often contained petals that reflected ultraviolet radiation (Richtmyer 1923), and the possibility emerged that flowers had evolved specific spectral signals to suit the perception of important pollinators like bees. Karl Daumer used an ultraviolet-sensitive camera setup to record the reflectance of several plant flowers, revealing that when outer petals reflected UV there was often a strong absorbance of radiation by the inner parts of the flowers, creating a bulls-eye effect as a potential signal (Daumer 1958). However, it was not until Autrum and von Zwehl (1964) measured the spectral sensitivities of honeybees using electrophysiological recordings that it was known for sure that bee vision was based on a UV-, BLUE- and GREEN-sensitive visual system. Experiments by von Helversen (1972) then measured the relative spectral discrimination abilities of honeybees, showing that the areas of best discrimination lay in the regions of the spectrum between the UV-, BLUE- and GREEN-sensitive photoreceptors. These important breakthroughs led to honeybees becoming a mainstay model for understanding colour perception, and subsequent investigations showed that bee brains contained opponent neural mechanisms (Kien and Menzel 1977; Yang et al. 2004; Dyer et al. 2011) that had previously been shown to be important for supporting colour perception in humans (Lee 2005).
Interestingly, like humans, honeybees cannot perceive colour at low light intensities (Menzel 1981); although the nocturnal Indian carpenter bee Xylocopa tranquebarica has been shown to use colour vision in very dim light (Somanathan et al. 2008).

Once it was well established that honeybees had trichromatic vision, and the basic principles of how colour was processed in the brain were understood, it became possible to construct models of bee colour perception (Chittka 1992), and to start evaluating how bees see colour reflected from biologically significant signals like flowers, which provide the mainstay of bee nutrition (pollen and nectar). This question gives important insights into classic signal-receiver relationships in evolution (Lythgoe 1979). By carefully measuring the spectral reflectance ‘signature’ of different flowers in the Northern Hemisphere, and comparing where these spectral signals have sharp changes in reflectance (a key factor in determining an object's colour) with the spectral discrimination abilities of honeybees (described above), it was possible to show that flowers had often evolved spectral signals (including UV) to fit the colours best discriminated by bee vision (Chittka and Menzel 1992; Chittka 1996). Importantly, subsequent work showed that most bee species tested share the same UV-, BLUE- and GREEN-sensitive colour visual system as honeybees, including stingless bees that are native to Australia (Chittka 1996; Briscoe and Chittka 2001). Australia is an important case study due to its long-term geological separation from other ancient continents by a significant sea barrier, and when a similar pattern of flower colour evolution was observed in Australia it showed that flowers had independently evolved signals to suit bee vision multiple times (Dyer et al. 2012; Shrestha et al. 2013). This work has recently been extended to how bees interact with flowers in different climatic conditions like mountains, establishing the importance of this colour signal-receiver system for understanding the potential effects of climate change on complex plant-pollinator systems (Bischoff et al. 2013; Shrestha et al. 2014; Dyer et al. 2015).
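
As a rough illustration of what such a model computes, the sketch below follows the colour hexagon of Chittka (1992): the quantum catch P of each photoreceptor, expressed relative to the adapting background, is converted to an excitation E = P / (P + 1), and the three excitations are projected onto two axes. The function names and the example quantum catches are hypothetical and chosen only for illustration; real inputs would be derived from measured reflectance spectra, the illuminant and the receptor sensitivity curves.

```python
import math


def excitation(quantum_catch):
    """Receptor excitation E = P / (P + 1), where P is the quantum
    catch relative to the background the eye is adapted to."""
    return quantum_catch / (quantum_catch + 1.0)


def hexagon_coordinates(p_uv, p_blue, p_green):
    """Project UV, BLUE and GREEN excitations onto the two hexagon axes."""
    e_uv = excitation(p_uv)
    e_blue = excitation(p_blue)
    e_green = excitation(p_green)
    x = (math.sqrt(3) / 2.0) * (e_green - e_uv)
    y = e_blue - 0.5 * (e_uv + e_green)
    return x, y


def colour_distance(a, b):
    """Euclidean distance between two loci in the hexagon."""
    return math.hypot(a[0] - b[0], a[1] - b[1])


# The adapting background has P = 1 for every receptor, so it sits at the origin.
background = hexagon_coordinates(1.0, 1.0, 1.0)

# Hypothetical quantum catches for a UV-absorbing, human-yellow flower.
flower = hexagon_coordinates(0.2, 0.5, 3.0)

print("flower locus:", flower)
print("distance from background:", colour_distance(flower, background))
```

In this representation, a larger distance between two loci is taken to mean that the two stimuli are easier for a bee to tell apart.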

A whole image from a faceted compound eye

The eye of an insect like a bee is very different to our own lens eye, in that bees have an apposition compound eye made of many different ommatidia (Land and Chittka 2013). The number of ommatidia in a honeybee eye is about 5000, although this can vary between bee species, and may even vary within some species (Land and Chittka 2013). Each ommatidium samples only a small visual angle from a scene, but the brain of the bee receives inputs from all the ommatidia. A leading question in our understanding of bee perception was whether their visual system simply processed localized information about elements from a few ommatidia (Horridge 2009), or whether the brain was able to bind information to create a holistic representation of an entire scene (Avarguès-Weber et al. 2010). This is not a straightforward problem, since in human perception it has been appreciated that there is often great complexity in how visual perception works. This is nicely encapsulated in the studies by David Navon (Navon 1977, 1981), with a range of very famous experiments on whether humans first perceive the ‘trees before the forest’, or the ‘forest before the trees’. Whilst humans have a preference for global ‘forest before the trees’ processing, this can be modulated through attentional mechanisms (Schwarzkopf and Rees 2011). Recently it has been possible to conduct analogous tests on honeybees, and show that after training to target and distractor stimuli containing hierarchical compound stimuli (stimuli that contained both local and global information content) bees could not only learn local cues within a scene, but also bind this information together to successfully recognize global constructs that were outlined by the local cues (Avarguès-Weber et al. 2015). Bees actually preferred global cues, and could transfer their choices to novel stimuli that maintained a global construct but did not contain any local cues.
Furthermore, by priming the visual system of the bees, attention could be directed towards local cues, showing plasticity in the brain for complex problem solving, and that a form of attention can be modulated with individual experience (Avarguès-Weber et al. 2015). Thus, consistent with other recent reports showing bees can configure multiple components of visual information to solve novel problems (Avarguès-Weber et al. 2010; Avarguès-Weber et al. 2011; Avarguès-Weber et al. 2012), when a bee sees a complex scene it has a capacity to process information holistically. It is also known from recent studies that bees can learn size relationships between meaningful objects within a scene (Avarguès-Weber et al. 2014), or can learn to apply complex rules to solve problems. For example, bees can learn above/below relationship rules (e.g. is the rewarding flowering bush above or below a fence-line; Avarguès-Weber et al. 2011; Chittka and Jensen 2011), or even combine multiple rules to make decisions in complex environments (Avarguès-Weber et al. 2012).

The photographic representation of bee vision

As we have seen above, bees have a capacity to process complex spatial information holistically, and to see colour using different wavelengths to human vision. Indeed bee colour vision facilitates very fine colour discrimination, and their capacity to resolve colour differences is consistent (Dyer and Neumeyer 2005) with that of human vision (bearing in mind spectral sensitivity differences).

It is possible to construct a mechano-optical ‘artificial compound eye’ simply by arranging about 5000 thin black tubes (e.g. drinking straws) into an array (Knowles and Dartnall 1977; Williams and Dyer 2007), such that the length of each straw, and the array as a whole, resolves information close to the acuity limit of bee vision, as defined by a modulation transfer function that has been measured in behavioral experiments for honeybees (Srinivasan and Lehrer 1988). This photographic system nicely represents the spatial acuity of bee vision by using a principle close to how different ommatidia operate in a bee eye (Knowles and Dartnall 1977; Williams and Dyer 2007).
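
The way straw length sets the angular sampling of such an array can be sketched with simple geometry. Assuming an idealised straight tube with perfectly absorbing walls, the steepest ray that can pass through without touching the wall enters at one edge of the front opening and leaves at the opposite edge of the back opening, so it makes an angle of atan(d / L) with the tube axis for inner diameter d and length L. The function names and the numbers below are hypothetical and are not the dimensions of the device described above.

```python
import math


def max_ray_angle_deg(inner_diameter, length):
    """Largest angle (degrees) from the tube axis at which a straight
    ray can still pass through a tube with absorbing walls."""
    return math.degrees(math.atan(inner_diameter / length))


def length_for_angle(inner_diameter, max_angle_deg):
    """Tube length needed so that rays steeper than max_angle_deg are blocked.
    The result is in the same units as inner_diameter."""
    return inner_diameter / math.tan(math.radians(max_angle_deg))


# Hypothetical example: a 5 mm wide straw and a 2 degree limit.
straw_diameter_mm = 5.0
target_angle_deg = 2.0
needed_length_mm = length_for_angle(straw_diameter_mm, target_angle_deg)

print("required straw length (mm):", round(needed_length_mm, 1))
print("check (degrees):", max_ray_angle_deg(straw_diameter_mm, needed_length_mm))
```

Longer or narrower tubes accept a narrower cone of light, which is why the straw length has to be matched to the acuity being simulated.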

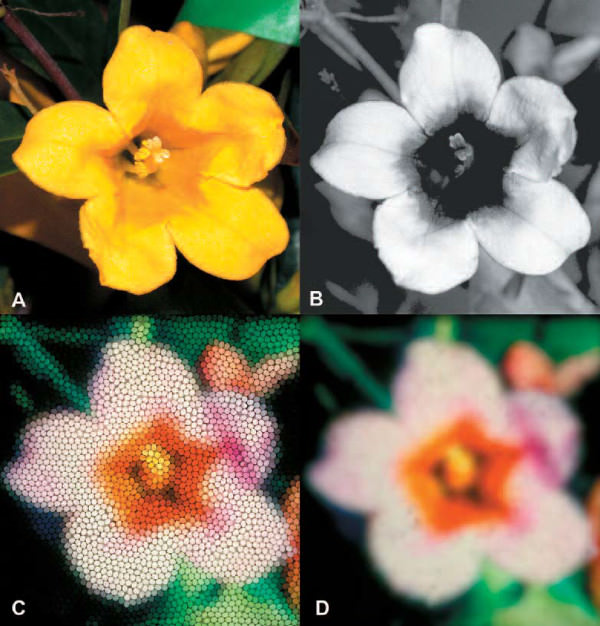

Understanding these principles allows us to create a photographic representation of how the visual system of a bee may view a flower that reflects UV light from the outer part of the petals, but absorbs UV from the inner parts of the flower (Fig 1B). This is possible by using a camera that has sensitivity (either film or digital sensor) to the short wavelength UV light that a bee can see, a quartz lens (or specialized glass lens) that transmits UV, and a UV transmitting (visible blocking) filter, as well as a capacity to capture blue and green wavelengths of light filtered by standard Kodak Wratten filters (Williams and Dyer 2007). When a flower is then photographed (Fig 1A-D) using a light source rich in visible and UV wavelengths (like typical daylight), it is possible to manipulate the resulting UV-, BLUE- and GREEN- camera ‘channels’ (representing bee vision) so that they are displayed with a wavelength shift of about 100 nm as blue, green and red. This allows us to see a representation of how a bee would see a (human) yellow flower (Gelsemium sempervirens) as two-coloured. The resulting false colour image can be displayed on a conventional colour-calibrated computer monitor, and then re-photographed through the mechano-optical device described above (Fig 1C). Since we know bees can holistically process information in a scene, a Gaussian blur filter can then be applied (to remove fine spatial information about the space between straws), providing a representation of bee vision that is consistent with how we understand their sensory perception based on behavior, physiology and optics as described above (Fig 1D).
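
The digital parts of this workflow, the false colour shift and the Gaussian blur, can be sketched in a few lines. This is a minimal illustration, not the processing used for Figure 1: it skips the optical re-photographing step through the straw array, represents each channel as a small nested list of values between 0 and 1, and uses hypothetical function names, pixel values and blur width.

```python
import math


def false_colour(uv, blue, green):
    """Shift bee channels to display channels: bee-GREEN is shown as red,
    bee-BLUE as green and bee-UV as blue. Returns rows of (R, G, B) tuples."""
    return [[(g, b, u) for u, b, g in zip(uv_row, blue_row, green_row)]
            for uv_row, blue_row, green_row in zip(uv, blue, green)]


def gaussian_kernel(sigma):
    """Normalised 1D Gaussian weights covering about three sigma each side."""
    radius = max(1, int(math.ceil(3 * sigma)))
    weights = [math.exp(-(i * i) / (2.0 * sigma * sigma))
               for i in range(-radius, radius + 1)]
    total = sum(weights)
    return [w / total for w in weights]


def blur_rows(channel, kernel):
    """Convolve every row with the kernel, repeating edge pixels at the borders."""
    radius = len(kernel) // 2
    blurred = []
    for row in channel:
        last = len(row) - 1
        blurred.append([
            sum(w * row[min(max(x + k - radius, 0), last)]
                for k, w in enumerate(kernel))
            for x in range(len(row))
        ])
    return blurred


def transpose(channel):
    return [list(column) for column in zip(*channel)]


def gaussian_blur(channel, sigma):
    """Separable Gaussian blur: blur along rows, then along columns."""
    kernel = gaussian_kernel(sigma)
    horizontal = blur_rows(channel, kernel)
    return transpose(blur_rows(transpose(horizontal), kernel))


# Hypothetical 3x3 channels: petals reflect UV, the flower centre absorbs it.
uv = [[0.9, 0.9, 0.9], [0.9, 0.1, 0.9], [0.9, 0.9, 0.9]]
blue = [[0.2] * 3 for _ in range(3)]
green = [[0.8] * 3 for _ in range(3)]

sharp = false_colour(uv, blue, green)
blurred = false_colour(*(gaussian_blur(c, sigma=1.0) for c in (uv, blue, green)))
print("centre pixel before blur:", sharp[1][1])
print("centre pixel after blur:", blurred[1][1])
```

In the false colour result, the UV-absorbing centre and the UV-reflecting petals differ in the displayed blue channel, which is what makes a flower that looks uniformly yellow to humans appear two-coloured.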

With the advent of high resolution digital cameras, including UV sensitivity in some specialized models (Garcia et al. 2013), it is now also possible to apply physics principles to linearize the spectral characteristics of the sensor, providing very precise information about the quantity of light (photons) reaching the visual system of a bee from a complex scene like a field of flowers (Garcia et al. 2014). This new photographic system thus promises an even finer-grained understanding of how other animals (bees are currently the best studied non-primate animal model) perceive and interact with their environment, and may provide new insights for machine vision or other advanced imaging applications in complex environments (Sandin et al. 2014). As we improve these techniques for representing and modeling animal vision, our understanding of nature moves out of the dark and into a bright and enriched appreciation that many animals have their own special sensory capabilities that can teach us much about the world in which we live.

Bee learning in complex environments

Once it was possible to build representations of how bees see their world, it was possible to gain a much better understanding of the types of information bees might extract and use in complex environments (Dyer et al. 2008). One of the biggest surprises when the mechano-optical device was used to survey complex objects was that bee vision is predicted to be able to see much finer detail than expected; for example, the inner features (nose, mouth, eyes) of a human face. Indeed, when subsequent behavioral experiments trained individual bees to see if they could learn to discriminate between faces, and recognize a learnt target face from similar novel distractors, the results were very surprising. Honeybees were able to reliably learn this task (Dyer et al. 2005), and even learn to recognize faces despite changes in viewpoint (Dyer and Vuong 2008). The mechanism bees used to learn such complex visual tasks was to configure the available local information into a full representation of the stimulus (Avarguès-Weber et al. 2010), a mechanism recently also shown to be possible in some wasp species (Sheehan and Tibbetts 2011; Chittka and Dyer 2012; Tibbetts and Dyer 2013). Bees could also learn other complex but novel tasks like recognizing or categorizing human painting styles (Wu et al. 2013; Chittka and Walker 2006), and the face learning experiments suggested a sophisticated capacity to successfully categorise information (e.g. face group vs no face group), consistent with evidence that bees can also categorise complex biologically meaningful stimuli like flower type, trees or landscapes (Zhang et al. 2004).

Operating in complex environments

One of the problems of viewing the world in detail is that there is often just too much information to process, and in human perception this is typically managed by attention mechanisms where attention is directed to the point within a scene where the eyes fixate (either through bottom-up saliency mechanisms or top-down volitional mechanisms; Martinez-Conde et al. 2004). So, given that bees can holistically process multiple components of a scene (Avarguès-Weber et al. 2015), how does their visual system deal with the problem of potentially processing too much complexity? It appears that bees may also use attentional type mechanisms, since learning of fine colour or spatial tasks is enhanced by experience (Giurfa 2004; Avarguès-Weber et al. 2010; Avarguès-Weber et al. 2015), and bees presented with multiple options in a complex scene (e.g. a simulated foraging field of flowers) do have to process individual elements (Spaethe et al. 2006; Morawetz and Spaethe 2012). This is shown by changes in the search time of individual bees depending upon the number of ‘flower’ elements present in a simulated search meadow, and indeed there is evidence of between-species differences: honeybees can only use a relatively slow serial search mechanism, but bumblebees appear to have evolved to use a parallel mechanism analogous to visual search in human subjects (Morawetz and Spaethe 2012; Bukovac et al. 2013). These latest discoveries teach us a lot about visual perception, as bees with a miniature brain of less than 1 million neurons can often perform tasks close to the limit of what a large mammalian brain permits (Chittka and Niven 2009). So 100 years after bees moved out of an assumed world of greyness, and into human consciousness as an animal that can use colour to operate in complex environments, we still find that their perception holds valuable lessons for understanding how and why we see the world the way that we do.
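
The difference between the serial and parallel search mechanisms described above can be made concrete with a toy model. This is a textbook simplification and not the analysis used in the cited studies: it assumes a single target among otherwise identical elements, a fixed inspection time per element for serial search, and a search time that does not depend on the number of elements for parallel search. All function names and timing values are hypothetical.

```python
def serial_search_time(n_elements, time_per_element):
    """Mean search time when elements are inspected one at a time in
    random order until the single target is found. On average the target
    is reached after (n + 1) / 2 inspections."""
    return time_per_element * (n_elements + 1) / 2.0


def parallel_search_time(n_elements, base_time):
    """Search time when all elements are processed at once, so the
    number of elements has no effect in this idealised case."""
    return base_time


# Hypothetical timings in seconds for meadows with more and more 'flowers'.
for n in (1, 5, 9, 17):
    serial = serial_search_time(n, time_per_element=2.0)
    parallel = parallel_search_time(n, base_time=3.0)
    print(n, "elements: serial", serial, "s, parallel", parallel, "s")
```

In such a model, a search time that grows with the number of elements is the signature of serial processing, whereas a flat relationship points to parallel processing.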

Figures

[reproduced from Williams and Dyer (2009); copyright remains the property of AG Dyer and S Williams]

Figure 1: A representation of how a bee may view a flower, based upon available data on honeybee physiology and behaviour. (A) A conventional colour photograph of a flower of Carolina Jessamine (Gelsemium sempervirens). (B) A photograph of the flower through an 18A UV transmitting filter that excludes visible light. (C) Image captured through an array of ray selectors to simulate insect compound vision. The image also represents a false colour shift of wavelengths so that bee-UV is displayed as blue, bee-BLUE is displayed as green and bee-GREEN is displayed as red. (D) Image processing has also been applied to remove high spatial frequency information to fit with the neural wiring of the apposition eyes in honeybees, and evidence that bees process information holistically. This image thus provides a simulation of how a bee would view a flower, considering both the spatial and colour properties of the insect’s compound eye.

Acknowledgements

I am grateful to the ARC for a QEII Fellowship to conduct much of the research encapsulated in this review (DP0878968).

References

Autrum H, von Zwehl V. "Spektrale Empfindlichkeit einzelner Sehzellen des Bienenauges". Z vergl Physiol 48.4 (1964):357-384.

Avarguès-Weber A, Portelli G, Benard J, Dyer A, Giurfa M. "Configural processing enables discrimination and categorization of face-like stimuli in honeybees". Journal of Experimental Biology 213 (2010):593-601.

Avarguès-Weber A, Dyer AG, Giurfa M. "Conceptualization of above and below relationships by an insect". Proceedings of the Royal Society London B 278 (2011):898-905.

Avarguès-Weber A, Dyer AG, Combe M, Giurfa M. "Simultaneous mastering of two abstract concepts by the miniature brain of bees". Proceedings of the National Academy Science (USA) 109 (2012):7481-7486.

Avarguès-Weber A, d’Amaro D, Metzler M, Dyer AG. "Conceptualization of relative size by honeybees". Frontiers in Behavioural Neuroscience 8 (2014):80.

Avarguès-Weber A, Dyer AG, Ferrah N, Giurfa M. "The forest or the trees: preference for global over local image processing is reversed by prior experience in honeybees". Proceedings of the Royal Society London 282 (2015):20142384. http://dx.doi.org/10.1098/rspb.2014.2384

Bischoff M, Lord JM, Robertson AW, Dyer AG. "Hymenopteran pollinators as agents of selection on flower colour in the New Zealand mountains: salient chromatic signals enhance flower discrimination". New Zealand Journal of Botany 51 (2013):181-193.

Briscoe A, Chittka L. "The evolution of colour vision in insects." Annual Review of Entomology 46 (2001):471-510.

Bukovac Z, Dorin A, Dyer AG. "A-bees see: a simulation to assess social bee visual attention during complex search tasks". In: Liò, et al. (Eds.), Proceedings of the 12th European Conference on Artificial Life (ECAL 2013). MIT Press, Taormina, Italy, September 2–6, pp. 276–283.

Chittka L. "Does bee colour vision predate the evolution of flower colour?" Naturwissenschaften 83 (1996):136-138

Chittka L, Walker J. "Do bees like Van Gogh’s Sunflowers?" Optics and Laser Technology 38 (2006): 323-328.

Chittka L, Niven J. "Are Bigger Brains Better?" Current Biology 19 (2009): R995-R1008.

Chittka L, Jensen K. "Animal Cognition: Concepts from Apes to Bees". Current Biology 21 (2011): R116-R119.

Chittka L. "The color hexagon: a chromaticity diagram based on photoreceptor excitations as a generalized representation of colour opponency". Journal of Comparative Physiology A 170 (1992): 533-543.

Chittka L, Menzel R. "The evolutionary adaptation of flower colors and the insect pollinators' color vision systems". Journal of Comparative Physiology A 171 (1992):171-181.

Chittka L, Dyer AG. "Your face looks familiar". Nature 481 (2012):154-155.

Daumer K. "Reizmetrische Untersuchungen des Farbensehens der Bienen". Z vergl Physiol 38 (1956):413–478.

Daumer K. "Blumenfarben, wie sie die Bienen sehen". Z Vergl Physiol 41 (1958):49–110.

Dyer AG, Neumeyer C. "Simultaneous and successive colour discrimination in the honeybee (Apis mellifera)". Journal of Comparative Physiology A 191 (2005):547-557.

Dyer AG, Neumeyer C, Chittka L. "Honeybee (Apis mellifera) vision can discriminate between and recognise images of human faces". Journal of Experimental Biology 208 (2005):4709-4714.

Dyer AG, Vuong QC. "Insect brains use image interpolation mechanisms to recognise rotated objects". PLoS ONE 3 (2008):e4086. (doi:10.1371/journal.pone.0004086)

Dyer AG, Rosa MGP, Reser DH. "Honeybees can recognise images of complex natural scenes for use as potential landmarks". Journal of Experimental Biology 211 (2008):1180-1186.

Dyer AG, Paulk AC, Reser DH. "Colour processing in complex environments: insights from the visual system of bees". Proceedings of the Royal Society London B 278 (2011):952-959

Dyer AG, Boyd-Gerny S, McLoughlin S, Rosa MGP, Simonov V, Wong BBM. "Parallel evolution of angiosperm colour signals: common evolutionary pressures linked to hymenopteran vision". Proceedings of the Royal Society London B 279 (2012):3606-3615

Dyer AG, Garcia JE, Shrestha M, Lunau L. "Seeing in colour: A hundred years of studies on bee vision since the work of the Nobel laureate Karl von Frisch". Proceedings of the Royal Society Victoria (in press, accepted 23 January 2015).

Frisch K von. "Der Farbensinn und Formensinn der Biene". Zool Jb Physiol 37 (1914):1-238

Garcia Mendoza J, Dyer AG, Greentree A, Spring G, Wilksch P. "Linearisation of RGB camera responses for quantitative image analysis of visible and UV photography: A comparison of two techniques". PLoS ONE 8 (2013): 1-10 ISSN: 1932-6203.

Garcia JE, Greentree AD, Shrestha M, Dorin A, Dyer AG. "Flower colours through the lens: Quantitative measurement with visible and ultraviolet photography". PLoS ONE 9 (2014): e96646. doi:10.1371/journal.pone.0096646.

Giurfa M. "Conditioning procedure and color discrimination in the honeybee Apis mellifera". Naturwissenschaften 91 (2004):228-231.

Helversen O von. "Zur spektralen Unterschiedsempfindlichkeit der Honigbiene". Journal of Comparative Physiology 80 (1972):439-472.

Horridge A. "What does an insect see?" Journal of Experimental Biology 212 (2009):2721-2729.

Kemp DJ, Herberstein ME, Fleishman LJ, Endler JA, Bennett ATD, Dyer AG, Hart NS, Marshall J, Whiting MJ. "An integrative framework for the appraisal of coloration in nature". American Naturalist (in press accepted 14 Jan 2015).

Kien J, Menzel R. "Chromatic properties of interneurons in the optic lobes of the bee II. Narrow band and colour opponent neurons". Journal of Comparative Physiology A 113 (1977):35-53

Knowles A, Dartnall HJA. "The Photobiology of Vision". (1977) London, Academic Press.

Kühn A. "Über den Farbensinn der Bienen". Z vergl Physiol 5 (1927):762-800.

Land M, Chittka L. "Vision. In: The Insects: Structure and Function" 5th Edition (eds. Simpson, S. J. and Douglas, A. E.). Cambridge: Cambridge University Press, (2013):708-737.

Lee HC. "Introduction to Color Imaging Science". Cambridge University Press, Cambridge (2005).

Lythgoe JN. "The ecology of vision". (1979) Oxford: Clarendon Press.

Martinez-Conde S, Macknik SL, Hubel DH. "The role of fixational eye movements in visual perception". Nature Reviews Neuroscience 5 (2004):229-240.

Menzel R. "Achromatic vision in the honeybee at low light intensities". Journal of Comparative Physiology A, 141 (1981): 389-393

Morawetz L, Spaethe J. "Visual attention in a complex search task differs between honeybees and bumblebees". Journal of Experimental Biology 215 (2012): 2515-2523.

Navon D. "Forest before trees: the precedence of global features in visual perception". Cognitive Psychology 9 (1977):353-383.

Navon D. "The forest revisited: more on global precedence". Psychological Research 43 (1981):1-32.

Richtmyer FK. "The reflection of ultraviolet by flowers". Journal of the Optical Society of America 7 (1923):151-156.

Sandin F, Khan AI, Dyer AG, Amin AHM, Indiveri G, Chicca E, Osipov E. "Concept Learning in Neuromorphic Vision Systems: What Can We Learn from Insects?" Journal of Software Engineering and Applications 7 (5) (2014): Article ID:45803, 9 pages.

Sacks O. "The Island of the Colorblind". (1997) A.A. Knopf. (pub) ISBN 978-0-676-97035-7.

Schwarzkopf DS, Rees G. "Interpreting local visual features as a global shape requires awareness". Proceedings of the Royal Society London B 278 (2011):2207-2215.

Sheehan MJ, Tibbetts EA. "Specialized Face Learning Is Associated with Individual Recognition in Paper Wasps" Science 334 (2011):1272-1275

Shrestha M, Dyer AG, Boyd-Gerny S, Wong BBM, Burd M. "Shades of red: bird pollinated flowers target the specific colour discrimination abilities of avian vision". New Phytologist 198 (2013):301-310.

Shrestha M, Dyer AG, Bhattarai P, Burd M. "Flower colour and phylogeny along an altitudinal gradient in the Himalayas of Nepal". Journal of Ecology 102 (2014):126-135.

Somanathan H, Borges RM, Warrant EJ, Kelber A. "Nocturnal bees learn landmark colours in starlight". Current Biology 18 (21) (2008):R996-R997.

Spaethe J, Tautz J, Chittka L. "Do honeybees detect colour targets using serial or parallel visual search?" Journal of Experimental Biology 209 (2006):987-993.

Srinivasan MV, Lehrer M. "Spatial acuity of honeybee vision and its spectral properties". Journal of Comparative Physiology A 162 (1988):159-172.

Tibbetts E, Dyer AG. "A face to remember". Scientific American 309 (2013): 62-67.

Williams S, Dyer AG. "A photographic simulation of insect vision". Journal of Ophthalmic Photography 29 (2007):10-14.

Wu W, Moreno A, Tangen J, Reinhard J. "Honeybees can discriminate between Monet and Picasso paintings". Journal of Comparative Physiology A 199 (2013):45-55

Yang EC, Lin HC, Hung YS. "Patterns of chromatic information processing in the lobula of the honeybee, Apis mellifera L." Journal of Insect Physiology 50 (2004):913-925

Zhang SW, Srinivasan MV, Zhu H, Wong J. "Grouping of visual objects by honeybees". Journal of Experimental Biology 207 (2004):3289-3298.